Implicit neural representations such as Neural Radiance Fields (NeRF) have focused mainly on modeling static objects captured under multi-view settings, where real-time rendering can be achieved with smart data structures, e.g., PlenOctree. In this paper, we present a novel Fourier PlenOctree (FPO) technique to tackle efficient neural modeling and real-time rendering of dynamic scenes captured under the free-view video (FVV) setting. The key idea in our FPO is a novel combination of generalizable NeRF, PlenOctree representation, volumetric fusion and Fourier transform. To accelerate FPO construction, we present a novel coarse-to-fine fusion scheme that leverages the generalizable NeRF technique to generate the tree via spatial blending. To tackle dynamic scenes, we tailor the implicit network to model the Fourier coefficients of time-varying density and color attributes. Finally, we construct the FPO and train the Fourier coefficients directly on the leaves of a union PlenOctree structure of the dynamic sequence. We show that the resulting FPO handles dynamic objects with a compact memory overhead and supports efficient fine-tuning. Extensive experiments show that the proposed method is 3000 times faster than the original NeRF and achieves over an order of magnitude acceleration over state-of-the-art methods, while preserving high visual quality for the free-viewpoint rendering of unseen dynamic scenes.
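The Fourier idea can be illustrated on a single leaf: instead of storing one density value per frame, a leaf keeps a few Fourier coefficients and reconstructs the value at any time t with an inverse transform. The Python sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes a real cosine/sine basis with an ordering chosen for this example and fits the coefficients by discrete orthogonal projection of per-frame samples; the helper names `fourier_basis`, `fit_fourier`, and `eval_fourier` are hypothetical. In the paper, the coefficients are modeled by the implicit network and then trained directly on the leaves, and the same idea would apply to each color (SH) coefficient as well as to density.

```python
import math
from typing import List, Sequence


def fourier_basis(i: int, t: float, num_frames: int) -> float:
    """Real Fourier basis function i at (possibly fractional) frame time t.

    Ordering assumed for this sketch: i = 0 is the constant term, odd i is a
    cosine and even i > 0 is a sine, both at k = (i + 1) // 2 cycles per sequence.
    """
    if i == 0:
        return 1.0
    k = (i + 1) // 2
    angle = 2.0 * math.pi * k * t / num_frames
    return math.cos(angle) if i % 2 == 1 else math.sin(angle)


def fit_fourier(samples: Sequence[float], num_coeffs: int) -> List[float]:
    """Project per-frame values of one leaf attribute onto the first num_coeffs basis terms."""
    num_frames = len(samples)
    if num_coeffs > num_frames:
        # With at most num_frames terms, the sampled basis vectors stay orthogonal and nonzero.
        raise ValueError("num_coeffs must not exceed the number of frames")
    coeffs = []
    for i in range(num_coeffs):
        basis = [fourier_basis(i, t, num_frames) for t in range(num_frames)]
        norm = sum(b * b for b in basis)
        coeffs.append(sum(s * b for s, b in zip(samples, basis)) / norm)
    return coeffs


def eval_fourier(coeffs: Sequence[float], t: float, num_frames: int) -> float:
    """Inverse transform: rebuild the attribute at time t from the kept coefficients."""
    return sum(c * fourier_basis(i, t, num_frames) for i, c in enumerate(coeffs))
```

For example, sampling `2.0 + 0.5 * cos(2*pi*t/8) + 0.25 * sin(4*pi*t/8)` on 8 frames and calling `fit_fourier(samples, 5)` recovers coefficients of approximately [2.0, 0.5, 0.0, 0.0, 0.25], and `eval_fourier(coeffs, 3.5, 8)` returns a smoothly interpolated value between frames 3 and 4. Storing `num_coeffs` numbers per attribute instead of one per frame is where the memory saving comes from when `num_coeffs` is much smaller than the number of frames.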

Pipeline

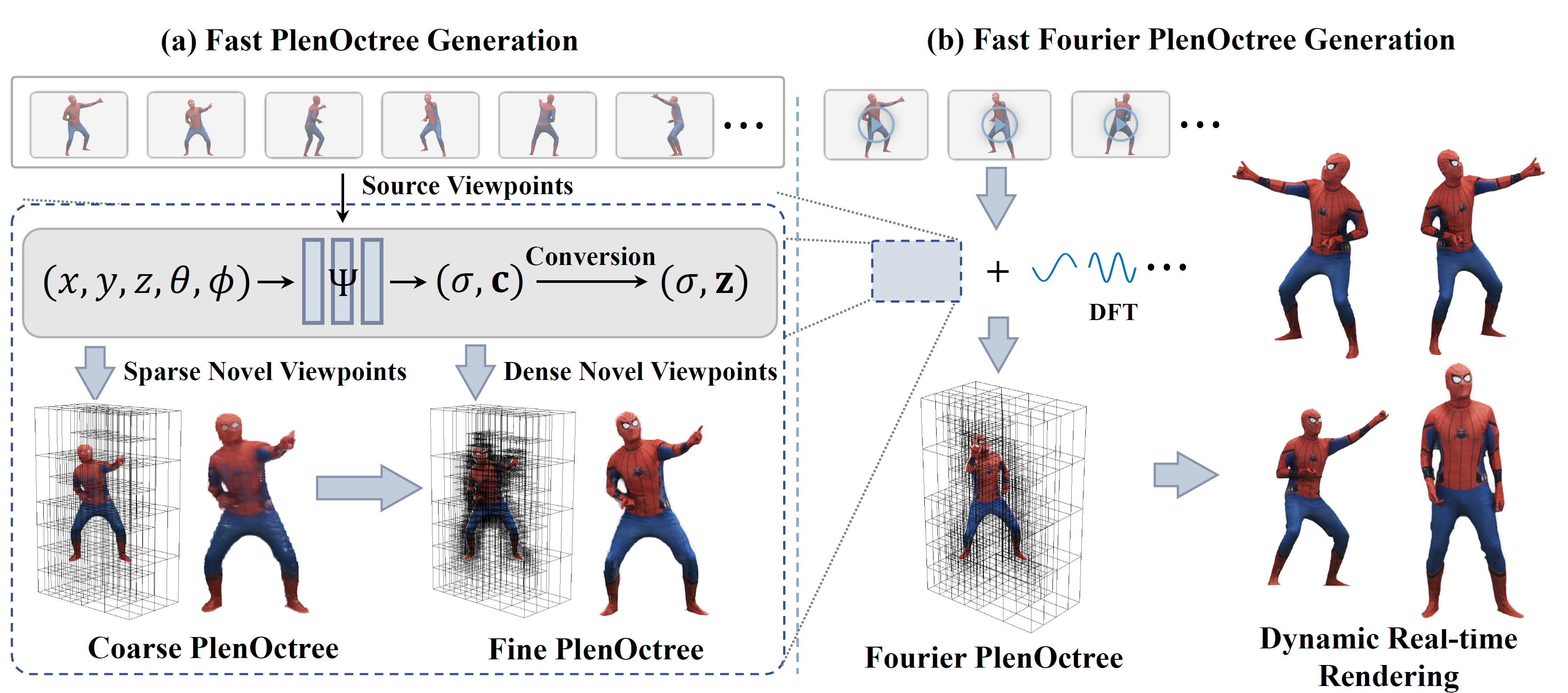

Illustration of our fast PlenOctree generation for static scenes and fast Fourier PlenOctree generation for dynamic scenes. (a) shows how to generate a PlenOctree from multi-view images. A generalizable NeRF Ψ takes a 3D sample point (x, y, z), a view direction (θ, φ), and the source views as input and predicts a view-dependent density σ and color c; we then convert these into a view-independent density σ and SH coefficients z. Using sparse-view RGB images rendered by Ψ, we obtain a coarse PlenOctree, which we finally fine-tune into a fine PlenOctree using dense-view images. (b) extends the pipeline to dynamic scenes by combining it with the Discrete Fourier Transform (DFT), enabling fast Fourier PlenOctree generation and real-time rendering of dynamic scenes.
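Step (a) turns the view-dependent outputs of Ψ into a view-independent density and SH coefficients. A standard way to turn sampled view-dependent colors into SH coefficients is Monte Carlo projection onto the SH basis, and a simple way to get one density is to average over directions. The sketch below assumes exactly that; it is not taken from the authors' code. Further assumptions: the callable type `QueryFn` is a hypothetical stand-in for Ψ at a fixed point, only SH degree 1 (4 coefficients per channel) is used for brevity, and directions come from a Fibonacci lattice. The function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical stand-in for the generalizable NeRF Psi at one point:
# (point, unit view direction) -> (density sigma, RGB color).
QueryFn = Callable[[Vec3, Vec3], Tuple[float, Vec3]]

SH_C0 = 0.28209479177387814  # real SH normalization for l = 0
SH_C1 = 0.4886025119029199   # real SH normalization for l = 1


def sh_basis_deg1(d: Vec3) -> List[float]:
    """Real spherical harmonics up to degree 1 (4 terms) for a unit direction d."""
    x, y, z = d
    return [SH_C0, -SH_C1 * y, SH_C1 * z, -SH_C1 * x]


def fibonacci_directions(n: int) -> List[Vec3]:
    """Quasi-uniform unit directions; each covers roughly 4*pi/n steradians."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for j in range(n):
        z = 1.0 - (2.0 * j + 1.0) / n
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = j * golden_angle
        dirs.append((r * math.cos(phi), r * math.sin(phi), z))
    return dirs


def to_view_independent(query: QueryFn, point: Vec3, n_dirs: int = 1000):
    """Average sigma over directions and project each RGB channel onto SH.

    Returns (sigma, sh) where sh[channel][k] is the k-th SH coefficient.
    """
    sigma_sum = 0.0
    sh = [[0.0] * 4 for _ in range(3)]
    for d in fibonacci_directions(n_dirs):
        sigma, rgb = query(point, d)
        sigma_sum += sigma
        basis = sh_basis_deg1(d)
        for ch in range(3):
            for k in range(4):
                sh[ch][k] += rgb[ch] * basis[k]
    # Monte Carlo estimate of the sphere integral of color * Y_k.
    weight = 4.0 * math.pi / n_dirs
    sh = [[weight * v for v in row] for row in sh]
    return sigma_sum / n_dirs, sh


def eval_sh_color(sh: Sequence[Sequence[float]], d: Vec3) -> Tuple[float, ...]:
    """Evaluate the view-independent SH color model for direction d."""
    basis = sh_basis_deg1(d)
    return tuple(sum(c * b for c, b in zip(row, basis)) for row in sh)
```

A query whose red channel is `0.5 + 0.2 * d[2]` is linear in the view direction, so degree-1 SH reproduce it closely; sharper view-dependent effects such as specular highlights would need higher SH degrees and more sampled directions.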

Results

Rendered appearance results of our Fourier PlenOctree method on several sequences, including humans, humans with objects, and animals.

BibTeX

Zhang, Yanshun and Zhang, Yingliang and Wu, Minye and Yu, Jingyi and Xu, Lan},
title = {Fourier PlenOctrees for Dynamic Radiance Field Rendering in Real-Time},
booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
month = {June},
year = {2022},
pages = {13524-13534}
}